I’ve been looking for a good way to transfer images of the hard drives on my VAX-11/730 over to my modern computers for archival and other fun. My previous attempt to bring up TCP/IP networking on the VAX was not successful, so I tried a different approach this weekend. And it worked! Well, mostly.

Now, before digging into the details, let’s describe the computer in question. The VAX-11/730 was small by VAX-11 standards, but it’s still a great big beast of a machine that takes up a lot of room in my little house. It also sucks lots of power and gives off a whole lot of heat, but luckily it was a cool and breezy weekend, so I could open the windows to keep the room from getting too hot. My VAX-11/730 has 3 MB of RAM, if I recall correctly. It has a 120-megabyte R80 fixed hard drive, a 10-megabyte RL02 removable-pack hard drive, and a 1600 BPI 9-track TU80 magtape drive. The R80 drive came with hobbyist-licensed OpenVMS 7.3 on it, with a node name of PIKE and a node ID of 1.730. The previous hobbyist owner apparently had it clustered with another VMS machine named KIRK. The system also came with an RL02 pack containing VMS 5.3, apparently a live installation from when this computer was in service. The 5.3 installation has a node name of PCSRTR and a node ID of 1.666, and was apparently part of a large network of dozens of VMS nodes used by a company in Wisconsin.

Ok, so this time I decided to teach a modern computer to speak DECnet, the VAX’s native networking language. After a lot of attempts and failures, I managed to set up a quite old Linux distribution in a virtual machine (VM) under VMware Fusion on my Mac Pro. Linux DECnet support was orphaned back in 2010.

I first tried a somewhat current distribution (Ubuntu 14.04 LTS) that still had the DECnet software in its package repositories. Well, the code is there, but it seems to have suffered from bit rot and no longer works.

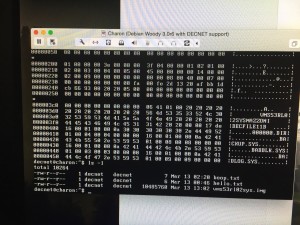

My next experiment was to install a much older Linux distribution from the era when the DECnet code was still actively developed and supported. In this case, I picked Debian "Woody" 3.0r6. It took a lot of trial and error to get it all working. I ended up installing a newer minor release of the Linux kernel from source code, 2.4.26 in this case, to get networking functioning at all under VMware.

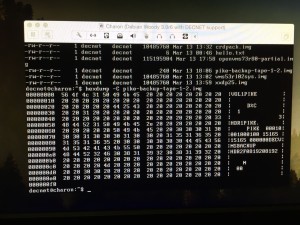

I had to run the VM in bridged network mode, because the DECnet protocol requires that the Ethernet MAC address match a specific pattern with the DECnet node ID encoded into it, so any computer speaking DECnet needs to be able to change its MAC address to match its node ID. This means that the VM just looks like an independent computer on my home network rather than sharing my Mac’s IP address with network address translation (NAT). I couldn’t get DHCP working on the VM, so I assigned it a static IP address. As we’ll see below, I had to get TCP/IP working on the VM even though I was just going to use it to speak DECnet. I named the VM Charon and gave it a DECnet node ID of 1.42.
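The encoding itself is straightforward. Here is a minimal Python sketch, assuming the standard DECnet Phase IV scheme: the node address becomes the 16-bit number area × 1024 + node, which is appended low byte first to the fixed AA-00-04-00 prefix. The `decnet_mac` function is just an illustrative name, not part of any DECnet tool.

```python
def decnet_mac(area: int, node: int) -> str:
    """Return the Ethernet MAC address for a DECnet Phase IV node address.

    The 16-bit address is area * 1024 + node (6-bit area, 10-bit node),
    stored low byte first after the fixed AA-00-04-00 prefix.
    """
    if not (1 <= area <= 63 and 1 <= node <= 1023):
        raise ValueError("area must be 1-63 and node must be 1-1023")
    address = area * 1024 + node
    octets = [0xAA, 0x00, 0x04, 0x00, address & 0xFF, address >> 8]
    return "-".join(f"{octet:02X}" for octet in octets)


# Node addresses mentioned in this post.
for name, (area, node) in {"CHARON": (1, 42), "PIKE": (1, 730),
                           "PCSRTR": (1, 666)}.items():
    print(name, f"{area}.{node}", decnet_mac(area, node))
# CHARON 1.42 AA-00-04-00-2A-04
# PIKE 1.730 AA-00-04-00-DA-06
# PCSRTR 1.666 AA-00-04-00-9A-06
```

This is why the MAC address has to be changeable: node 1.42 can only ever live at AA-00-04-00-2A-04.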

I would normally transfer files on and off a VM using the folder sharing feature in VMware, which requires a device driver for the Host-Guest Filesystem (hgfs) device in the guest operating system’s kernel. Well, Linux 2.4 is pretty old, and I couldn’t get the driver to build. So instead, I mounted an NFS export from my network-attached RAID box on the VM to get data on and off of it. This is why I had to have TCP/IP networking functioning in the VM.

It took a lot of attempts at reconfiguring and recompiling the VM’s kernel to get it to talk to the virtualized Ethernet interface. I had the VAX running in the other room, and when I finally typed the last Linux command to get the DECnet software to talk to the network, I was thrilled to hear a beep followed by printing sounds from the DECwriter III console terminal in the next room as the VAX noticed the Linux VM appearing on the network. Hooray! I wish I could write down simple and concise steps to make this all work, but it was a long, frustrating process of trial and error.

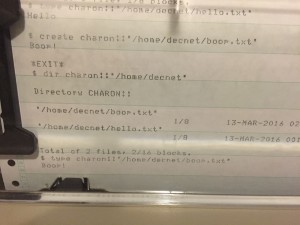

After that, it was even more thrilling to first read a small text file from the Linux VM on the VAX, and then create another file on the VM from the VAX and read it back.

With data flowing, it was now time to try imaging some disks. VMS doesn’t have a dd command like Unix, but instead you can mount a drive with the /FOREIGN qualifier to treat it as a raw device rather than a VMS filesystem, and then simply copy the device with the regular COPY command. In other words, something like this:

```
$ MOUNT/FOREIGN/NOWRITE DQA1:
$ COPY DQA1: CHARON::"/home/decnet/mydiskimage.img"
```

Since the disk to be imaged needs to be mounted with /FOREIGN, it can’t be the disk that the system is running from. Well, no problem: I should be able to boot OpenVMS 7.3 from the R80 drive to image packs in the RL02 drive, and then boot VMS 5.3 from the RL02 drive to image the R80 drive. I started off imaging that VMS 5.3 pack.

Success! Well, I don’t have a way to validate the contents of the Files-11 volume image I just created yet, but at least the file is exactly the expected size and it has interesting-looking data in it.
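Checking the size is simple arithmetic: a raw image should be the device's block count times 512 bytes. A minimal Python sketch of that check, assuming the RL02's nominal 20,480 blocks (40,960 sectors of 256 bytes) and the 242,606-block R80 total reported by the failed R80 copy further down; `expected_bytes` and `check_image` are hypothetical helper names.

```python
import os

BLOCK_SIZE = 512  # bytes per VMS disk block

# Assumed device capacities in 512-byte blocks: the RL02 figure is its
# nominal size, the R80 figure is the total reported during the R80 copy.
DEVICE_BLOCKS = {"RL02": 20_480, "R80": 242_606}


def expected_bytes(drive: str) -> int:
    """Size, in bytes, that a complete raw image of `drive` should have."""
    return DEVICE_BLOCKS[drive] * BLOCK_SIZE


def check_image(path: str, drive: str) -> bool:
    """Print an image file's size in blocks and compare it to the device."""
    actual = os.path.getsize(path)
    blocks, leftover = divmod(actual, BLOCK_SIZE)
    print(f"{path}: {blocks} blocks + {leftover} bytes "
          f"(expected {DEVICE_BLOCKS[drive]} blocks)")
    return actual == expected_bytes(drive)
```

For example, `check_image("/home/decnet/mydiskimage.img", "RL02")` should return `True` for a complete RL02 image of 10,485,760 bytes. A leftover that isn't zero would point to a truncated transfer rather than a short disk.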

Next, I moved on to the Customer-Runnable Diagnostics pack that also came with the system. It seems to have transferred ok, too!

Then, I transferred over a pack labeled XXDP V2.5 that came with a separate purchase of RL02 packs. If the label is to be trusted, this pack should contain PDP-11 diagnostic programs.

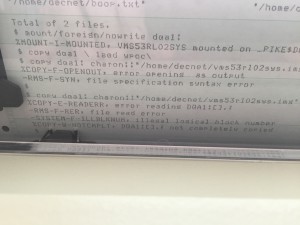

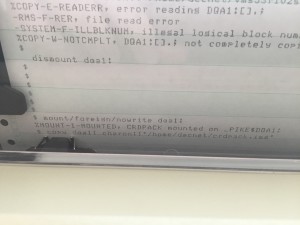

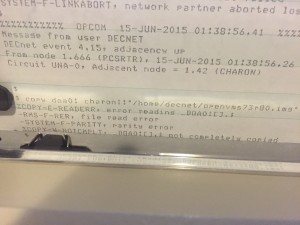

Finally, it was time to boot from the VMS 5.3 pack and transfer over the OpenVMS 7.3 installation on the R80 drive, and this is where the luck ran out. During my first attempt, I decided to go take a nap while the long copy occurred. Unfortunately, my Mac also decided to take a nap, and the copy aborted at around 90 megabytes when the VM was put to sleep. So I tried again, this time while awake and babysitting things. The copy proceeded further, but aborted with a parity error from the drive after transferring 224,992 out of 242,606 blocks. Maybe this was just from a bad block that’s mapped out in the filesystem, but exposed when reading the raw device? If I had a nice dd command then I would just try to resume the copy at the failing offset, or maybe one or more blocks after it, and then I could splice together the broken pieces later. I don’t know how to do that in VMS, though, so this will need to wait for another session.
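The splicing part, at least, is easy to do on the Linux side. Here is a minimal Python sketch, assuming two partial raw images made of whole 512-byte blocks, where the second one was copied starting at a known block number. `splice_images` is a hypothetical helper, and getting VMS to resume a raw copy at a given block is still the open question.

```python
BLOCK_SIZE = 512  # bytes per VMS disk block


def splice_images(head: bytes, tail: bytes, tail_start_block: int,
                  total_blocks: int) -> bytes:
    """Join two partial raw disk images into one full-size image.

    `head` holds blocks starting at block 0 from the first copy; `tail`
    holds blocks starting at `tail_start_block` from a resumed copy.
    Any blocks between the two (e.g. an unreadable one) become zeros.
    """
    if len(head) % BLOCK_SIZE or len(tail) % BLOCK_SIZE:
        raise ValueError("pieces must be whole 512-byte blocks")
    head_blocks = len(head) // BLOCK_SIZE
    if tail_start_block < head_blocks:
        # Overlap: keep the head's copy and drop the tail's duplicate blocks.
        tail = tail[(head_blocks - tail_start_block) * BLOCK_SIZE:]
        tail_start_block = head_blocks
    gap = bytes((tail_start_block - head_blocks) * BLOCK_SIZE)
    image = head + gap + tail
    expected = total_blocks * BLOCK_SIZE
    if len(image) > expected:
        raise ValueError("pieces extend past the end of the device")
    # Zero-pad a short result so the image always has the device's full size.
    return image + bytes(expected - len(image))
```

With the R80 numbers above, assume the unreadable block is block 224,992 (numbering from 0, the one right after the last good block) and the resumed copy starts at block 224,993. Then `splice_images(head, tail, 224_993, 242_606)`, with `head` and `tail` read from the two partial image files, would produce a full 242,606-block image with that one block zeroed. Zero-filling keeps every later block at its correct offset, so the filesystem structures still line up.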

Before shutting down the system, I tried copying over one of the backup tapes that I had previously made. There were no errors, but it just moved 240 bytes of data that apparently contain a volume label. I was hoping that I could image magtapes this way, but it looks like I haven’t cracked that code yet.
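One clue: 240 bytes is exactly three 80-byte records, and 80 bytes is the record length of the ANSI-standard magtape labels (VOL1, HDR1, HDR2 and so on) that VMS uses on labeled tapes. Assuming that is what came across, this small Python sketch splits such data into 80-byte records and returns each record's four-character identifier. `label_records` is a hypothetical name, and this is a guess that hasn't been checked against the actual file.

```python
RECORD_SIZE = 80  # ANSI magtape label records are 80 bytes long


def label_records(data: bytes) -> list[str]:
    """Split label data into 80-byte records and return their identifiers.

    Each ANSI label record starts with a 4-character ASCII identifier
    such as VOL1 or HDR1.
    """
    if len(data) % RECORD_SIZE:
        raise ValueError("data is not a whole number of 80-byte records")
    return [
        data[i:i + RECORD_SIZE][:4].decode("ascii", errors="replace")
        for i in range(0, len(data), RECORD_SIZE)
    ]
```

If the transferred file really holds labels, reading it in binary mode and passing the bytes to `label_records` should list something like a VOL1 record followed by HDR records, which would mean COPY stopped at the first tape mark instead of reading the data beyond it.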

Well, at least I seem to have a way to transfer RL02 packs to my modern machines now. I might even be able to use this technique to write images to fresh RL02 packs.

Nice write-up, Mark.

I have a VAX-11/750, and after a few issues it starts up to the VAX command prompt.

As it is just 10 degrees Celsius in my “computer hangar” at the moment, I have to wait a few weeks before I start up an RA81 (the boot device).

By that time, I will certainly re-read your blog!

Thanks,

– Henk, PA8PDP

Now that the networking seems solid and you have some basic/safety copies, you should try using BACKUP instead of COPY. VMS BACKUP savesets are more robust, may get around your read error, and can certainly be used to restore to the same or different sized media – handy if you ever manage to connect a larger, more modern drive.

I’m encouraged by your success, thinking about the 11/730 I have sitting in storage. One of these days…

I have not figured out how to do a BACKUP across the network to a file on my Linux VM yet. If there is a way to do that, then I would like to learn how.

You know, you could boot an older version of NetBSD and use dd to image disks. Older versions could run in as little as 2 megabytes of memory, plus you can even netboot NetBSD via MOP and mount NFS, then dd straight to the NFS mount. A NetBSD VM could easily do both MOP and NFS.

http://www.heeltoe.com/retro/vax730/

Interesting! Thanks for the suggestion.